A wireless localization system designed to learn from a user's movements over time, map out rooms, and guide the user through them without relying on sight.

Once we had settled on an idea, we created a concept map to structure our project timeline. We divided the workload among our group into four main areas: hardware, networking, data analysis, and the web application.

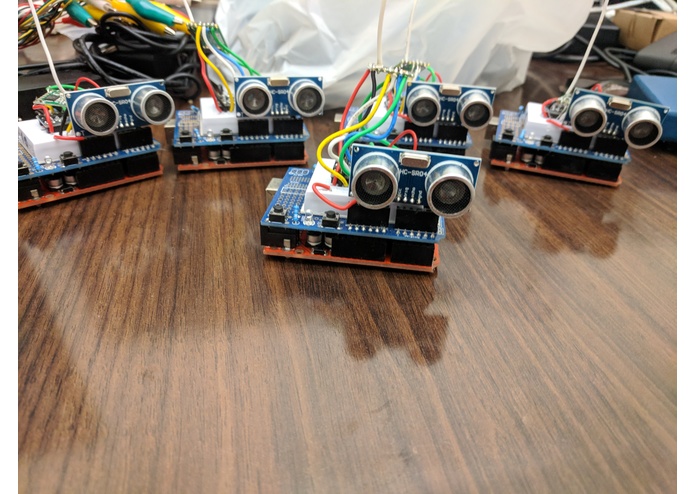

For hardware, we designed a low-latency sensor network using Arduino Unos equipped with RFM69 radio frequency transceivers. Each Arduino and transceiver pair acted as a wireless sensor node that transmitted ultrasonic distance readings to a central gateway. To collect localization data, we placed at least four ultrasonic sensors around the room and one on the person. The person navigating the room also carried an Arduino 101, whose built-in gyroscope tracked their rotation. Combining the readings from every sensor let us estimate the user's position through data fusion.
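The writeup does not specify how distance readings become a position, so the following is only a minimal sketch of one common approach: linearized least-squares trilateration. It assumes each fixed room sensor has a known (x, y) position and yields one distance to the user; the function name `trilaterate` and the example coordinates are hypothetical, not taken from the project. Subtracting the first sensor's circle equation from the others turns the problem into a small linear system, and with four or more sensors the extra equations are averaged out by least squares, which helps absorb ultrasonic noise.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def trilaterate(anchors: Sequence[Point], distances: Sequence[float]) -> Point:
    """Estimate (x, y) from distances to >= 3 fixed anchors (hypothetical helper).

    Each anchor i satisfies (x - xi)^2 + (y - yi)^2 = di^2. Subtracting the
    equation for anchor 0 gives a linear row:
        2(xi - x0) x + 2(yi - y0) y = d0^2 - di^2 + xi^2 - x0^2 + yi^2 - y0^2
    which we solve in the least-squares sense via the 2x2 normal equations.
    """
    if len(anchors) != len(distances) or len(anchors) < 3:
        raise ValueError("need at least 3 anchors with one distance each")
    (x0, y0), d0 = anchors[0], distances[0]
    # Accumulate A^T A (s_xx, s_xy, s_yy) and A^T b (r_x, r_y).
    s_xx = s_xy = s_yy = r_x = r_y = 0.0
    for (xi, yi), di in zip(anchors[1:], distances[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        s_xx += a * a
        s_xy += a * b
        s_yy += b * b
        r_x += a * c
        r_y += b * c
    det = s_xx * s_yy - s_xy * s_xy
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    # Cramer's rule on the 2x2 normal equations.
    x = (s_yy * r_x - s_xy * r_y) / det
    y = (s_xx * r_y - s_xy * r_x) / det
    return x, y


if __name__ == "__main__":
    # Hypothetical sensor positions in metres at the corners of a 4 m x 3 m room.
    room = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
    user = (1.0, 1.0)
    readings = [math.dist(a, user) for a in room]
    print(trilaterate(room, readings))  # close to (1.0, 1.0)
```

A linear solve was chosen here because it needs no iteration or external libraries; an iterative nonlinear fit could be more accurate when readings are noisy.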

The DragonBoard served as the central brain of the network, handling everything from data collection to data processing and fusion. It also acted as a gateway bridge between the sensor module hub and the front-end visualization. Once processed, the raw sensor data was converted into X and Y coordinates, which the computer used to draw a map of the current room over time. These coordinates were stored in MySQL for ease of querying.
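To illustrate why storing coordinates in a SQL database makes them easy to query, here is a minimal sketch of a position log. It uses Python's built-in `sqlite3` module as a stand-in for MySQL so the example runs without a server; the table name `positions`, its columns, and the helper functions are hypothetical and not the project's actual schema. The queries shown (time-ordered path for one session, and the bounding box of visited points as a rough room extent) are plain SQL that would look much the same in MySQL, apart from the parameter placeholder style of the driver.

```python
import sqlite3
from typing import List, Optional, Tuple

# Hypothetical schema: one row per fused (x, y) position estimate.
SCHEMA = """
CREATE TABLE IF NOT EXISTS positions (
    id INTEGER PRIMARY KEY,
    session_id TEXT NOT NULL,
    ts_ms INTEGER NOT NULL,
    x REAL NOT NULL,
    y REAL NOT NULL
)
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_position(conn: sqlite3.Connection, session_id: str,
                    ts_ms: int, x: float, y: float) -> None:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO positions (session_id, ts_ms, x, y) VALUES (?, ?, ?, ?)",
            (session_id, ts_ms, x, y),
        )


def path_between(conn: sqlite3.Connection, session_id: str,
                 start_ms: int, end_ms: int) -> List[Tuple[int, float, float]]:
    # Time-ordered trail of positions, e.g. for replaying movement on the map.
    cur = conn.execute(
        "SELECT ts_ms, x, y FROM positions "
        "WHERE session_id = ? AND ts_ms BETWEEN ? AND ? ORDER BY ts_ms",
        (session_id, start_ms, end_ms),
    )
    return cur.fetchall()


def visited_extent(conn: sqlite3.Connection,
                   session_id: str) -> Optional[Tuple[float, float, float, float]]:
    # (min_x, max_x, min_y, max_y) of all visited points: a rough room outline.
    row = conn.execute(
        "SELECT MIN(x), MAX(x), MIN(y), MAX(y) FROM positions WHERE session_id = ?",
        (session_id,),
    ).fetchone()
    return None if row[0] is None else row


if __name__ == "__main__":
    db = open_store()
    record_position(db, "walk-1", 0, 0.5, 1.0)
    record_position(db, "walk-1", 500, 1.2, 1.4)
    print(path_between(db, "walk-1", 0, 1000))
    print(visited_extent(db, "walk-1"))
```

Because the extent grows as the user explores, re-running the same query over time gives a progressively fuller outline of the room.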